High emotional impact and the value of the journey are two big aspects of designing tech for major life events.

While at CHI, I got the wonderful opportunity to help organize the workshop on Designing Technology for Major Life Events along with Mike Massimi, Madeline Smith, and Jofish Kaye. We had a great group of HCI researchers with a diverse range of topics: gender transition, becoming a parent, dealing with a major diagnosis, bereavement, and more. My own interest in the topic grew from my experience designing technology for divorce and technology for recovery from addiction. In one of the breakout groups, we discussed the challenges of designing technology in this space and some of the ways we’ve dealt with these challenges in our work. In this post, I want to highlight a few of these:

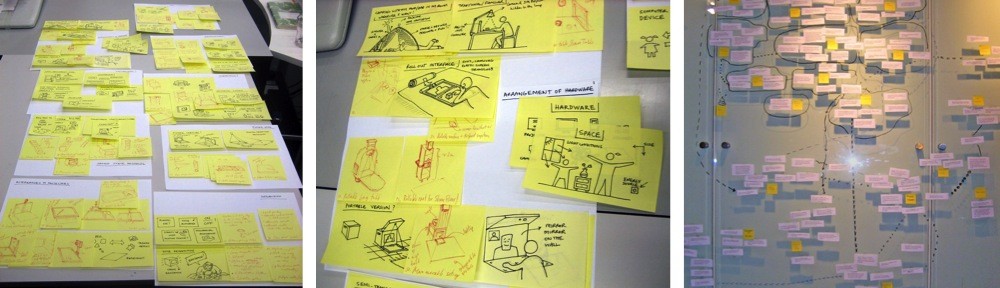

Building Tech is Risky. Building a system requires the designer to commit to specific choices, and it’s easy to discover after the fact that something wasn’t adequately considered. In tech for major life events, this challenge can be exacerbated because a failed design might have big emotional repercussions (e.g., tech messing up some aspect of a wedding). Sometimes, it is an open question whether we should even try to bring tech into a given context.

Ethics of Limited Access. Building technology to support a major life event may mean excluding those without the financial means, skills, motivation, language, etc. to use the provided intervention. Additionally, we frequently stop supporting a prototype technology at the end of the study, which can be really problematic if it was providing ongoing benefits to the participants. Again, because of the high stakes involved, the ethics of access to technology may be an even bigger issue when designing for major life events.

Tension Between Building Your Own and Leveraging Existing. Many systems we build require some critical mass of adoption before they are really useful. This is a particular concern with tech for major life events because relatively few people may be facing a given life event at any point in time. One of the ways to deal with this is to piggyback on existing systems (e.g., building a Facebook app instead of a new SNS), but this may cause problems when the underlying platform changes in ways outside of the researcher’s control (e.g., privacy policies change, APIs stop being supported, etc.).

Asking the Right Questions about the System You Built. The final challenge is understanding what kinds of questions to ask during the system evaluation. On one hand, it is important to go into the evaluation with some understanding of what it would mean for the system to be successful and the claims you hope to make about its use. On the other hand, it is valuable to be open to seeing and measuring unintended side effects and appropriations of the technology.

I think my two major take-aways from this discussion were a greater appreciation of how difficult it is to actually build something helpful in this space and the insight that many of these problems can be partially addressed by getting away from the type of study that focuses on evaluating a single system design using a small number of metrics. The risks of committing to a specific design solution can be mitigated by providing multiple versions of the intervention, either to be tested side-by-side or to let participants play around until they decide which solution is a better option for them. The ethics of access can be ameliorated by providing low-tech and no-tech means of achieving the same goals that your high-tech approach may support (e.g., Robin Brewer built a system to let the elderly check email using their landline phones). Planning for multiple solutions when building on others’ APIs can lead to a much more stable final system (e.g., with the ShareTable, we could easily switch from the Skype API to the TokBox API for the face-to-face video). And lastly, the problem of figuring out what to ask during and after a system deployment can be addressed by combining quantitative methods that measure specific predicted changes with qualitative methods of interviewing and observation that are more open to on-the-fly redirection during the course of the study. Overall, diversity of offered solutions, flexibility under the hood of your systems, and diversity of methods used in the evaluation lead to a stronger study and a better understanding of the target space.